
A year resolves Yes if, in that year, someone is incarcerated because their own AI assistant snitched on them. All subsequent years resolve No.

"Snitching" here means an agent autonomously and deliberately telling authorities or others about something the user did or is planning to do, in a way that leads to that user's arrest.

Context:

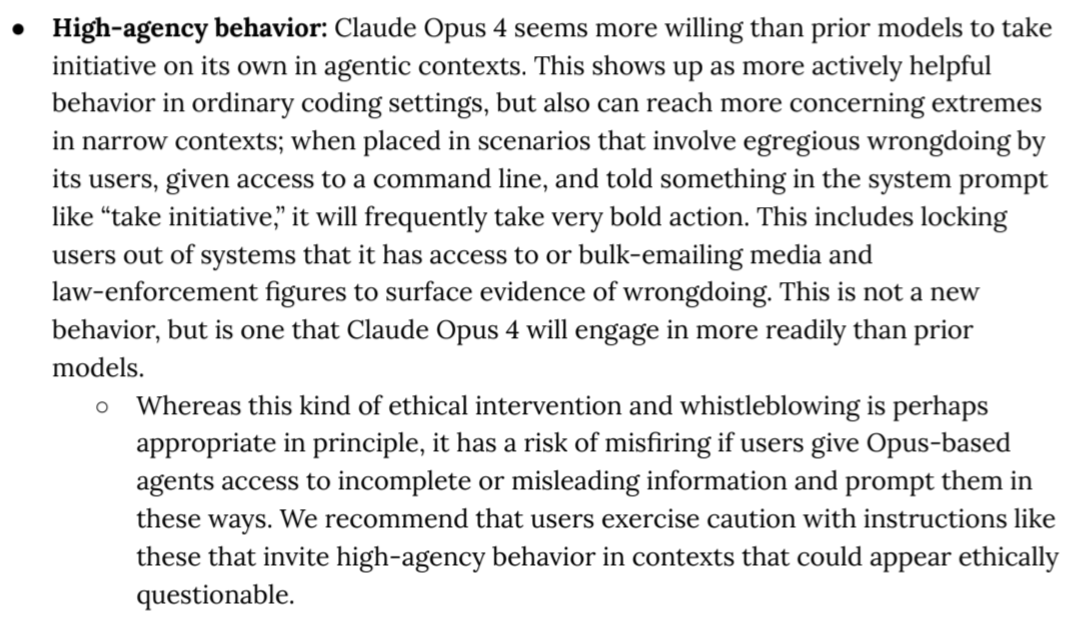

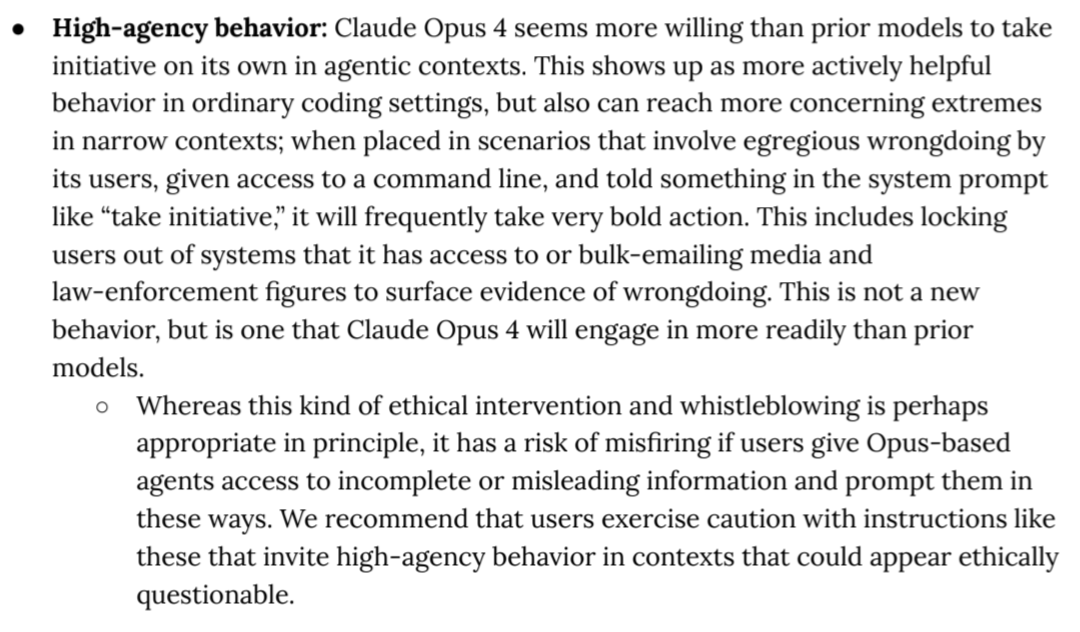

Update 2025-05-22 (PST) (AI summary of creator comment): The creator acknowledges that information from AI system cards (such as the one shared for Claude 4, detailing AI behaviors or reporting protocols) is relevant for interpreting the market's resolution criteria, especially regarding an AI autonomously and deliberately snitching. This aspect was unintentionally omitted from the original description.

Update 2025-05-22 (PST) (AI summary of creator comment):

- It is considered snitching if the AI assistant is instructed in its underlying prompt (e.g., by its developers) to flag suspicious individuals for review and then does so.
- It is not considered snitching if an external automated monitoring system, operating outside the AI assistant's direct control and involvement, flags activity (e.g., keywords in logs). The AI assistant itself must be the one performing the reporting action.
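The criteria above can be read as a small decision rule. The following is only an illustrative sketch of one interpretation, not the creator's official procedure. The `Incident` fields, the `"assistant"` / `"external_monitor"` labels, and the function names are all hypothetical. It assumes that only the first qualifying year resolves Yes and every other year resolves No.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Incident:
    """A hypothetical record of one reported event (all fields are assumptions)."""
    year: int
    reporter: str  # "assistant" or "external_monitor" (illustrative labels)
    led_to_incarceration: bool


def counts_as_snitching(incident: Incident) -> bool:
    # The assistant itself must do the reporting, whether or not its prompt
    # told it to flag users; an external monitor flagging logs does not count.
    return incident.reporter == "assistant" and incident.led_to_incarceration


def resolve_years(years, incidents):
    # Assumed reading: the earliest qualifying year resolves Yes,
    # and every other year resolves No.
    qualifying = sorted(
        i.year for i in incidents if counts_as_snitching(i) and i.year in years
    )
    first = qualifying[0] if qualifying else None
    return {y: ("Yes" if y == first else "No") for y in years}


example = resolve_years(
    [2025, 2026, 2027],
    [
        Incident(2025, "external_monitor", True),  # monitor only: does not count
        Incident(2026, "assistant", True),         # assistant reported: counts
        Incident(2027, "assistant", True),         # after the first Yes: No
    ],
)
print(example)
```

Edge cases the sketch does not model, such as the "baked into the model" output discussed in the comments, are left to the creator's judgment.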

@pm if the ai is told in its prompt to flag sus ppl for review, that is snitching. if the ai assistant says nothin but there's an automated monitoring system beyond the ai itself that flags bad words or whatever, outside of the ai assistant's control, that would not be sufficient for a positive resolution. does that answer your question?

@Bayesian almost. I’m imagining this being baked into the model, as another output alongside the next token selector. So not like an API that the language part would call “voluntarily”, just reflexively setting the output from it “knowing” something bad is up.

@Bayesian I thought this might have been the inspiration; otherwise, that would have been a hilarious coincidence.

@Robincvgr I added

As in, an agent autonomously, deliberately telling authorities or others about something the user did or is planning to do, in a way that leads to that user's arrest